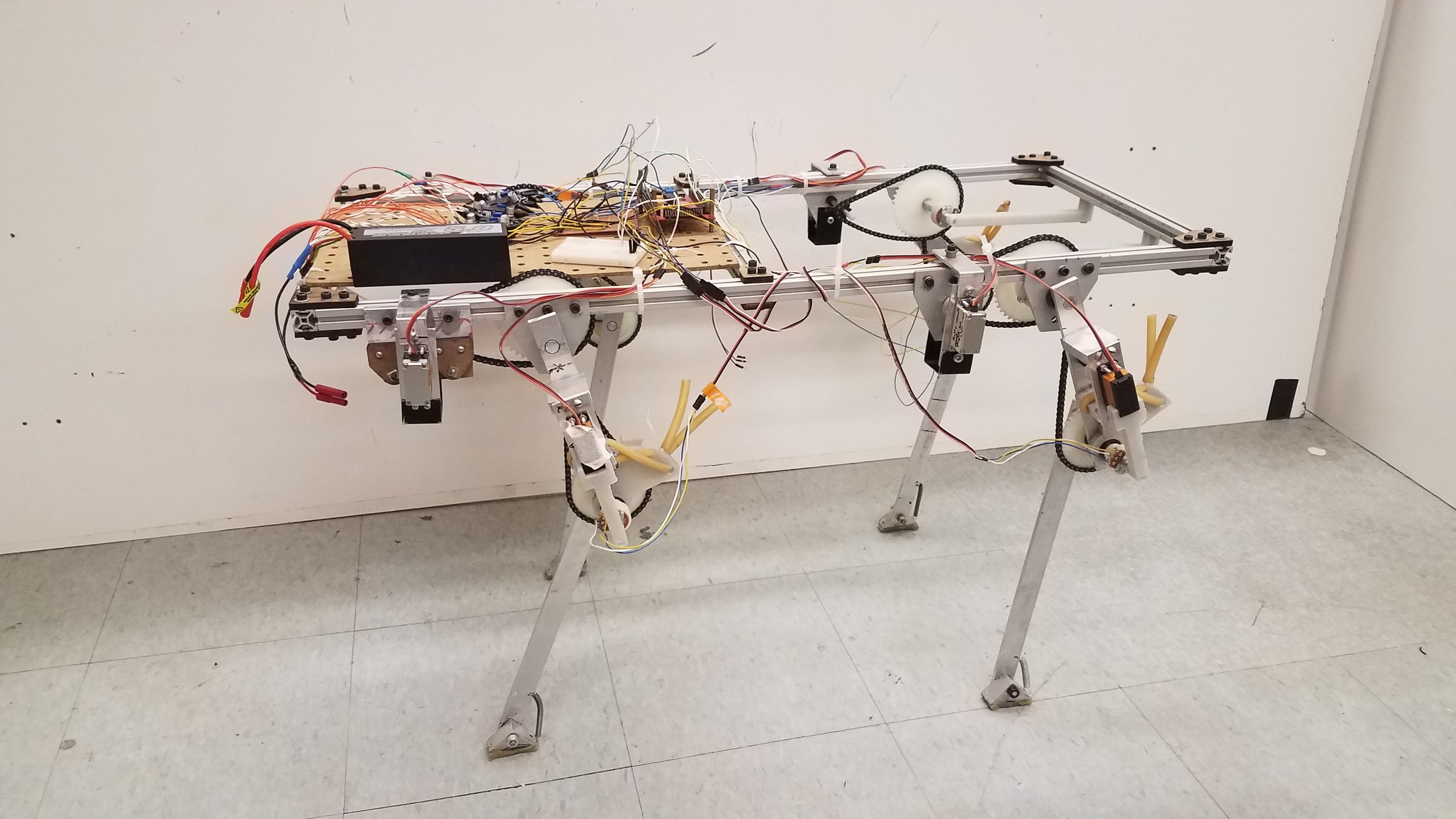

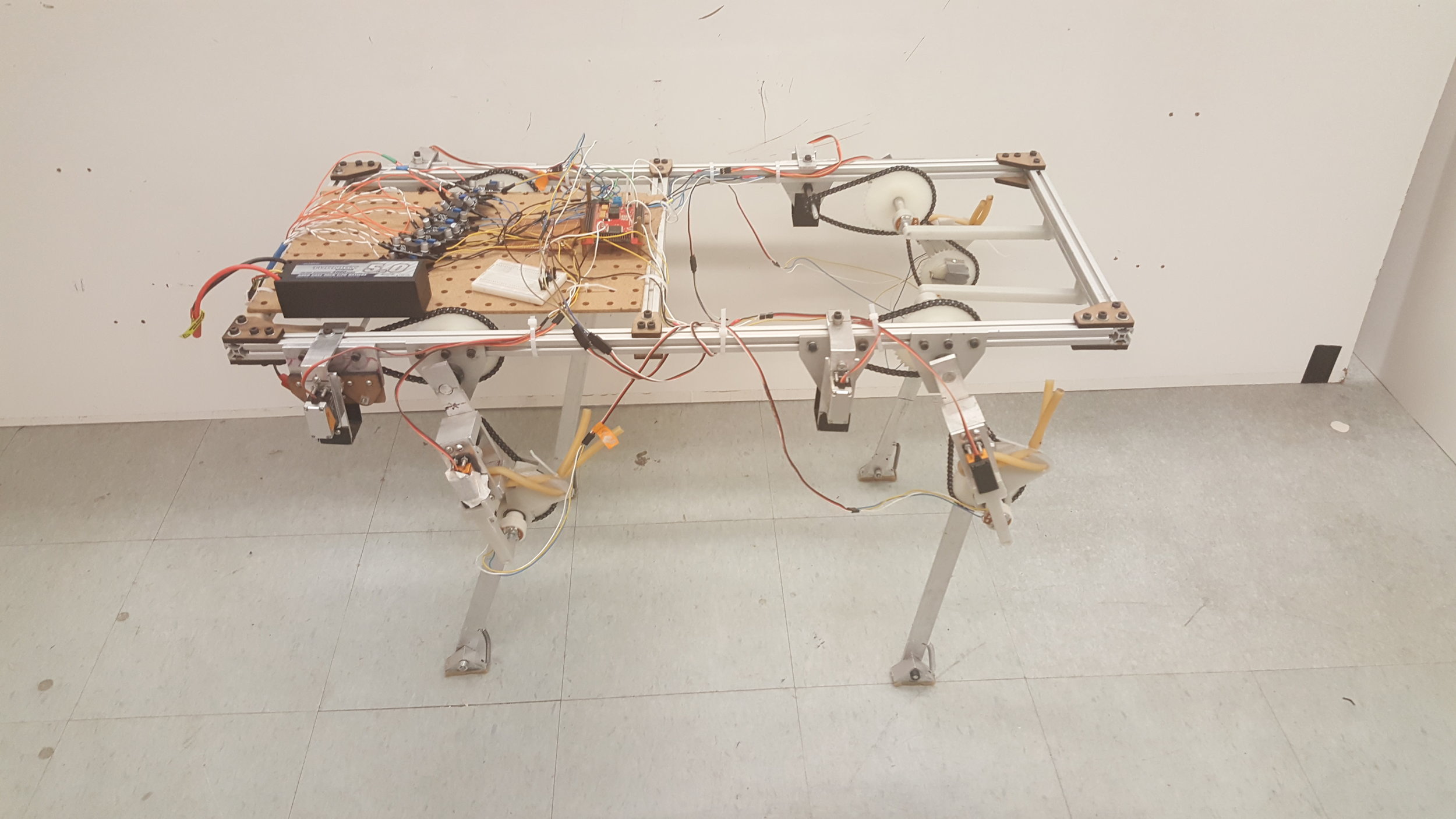

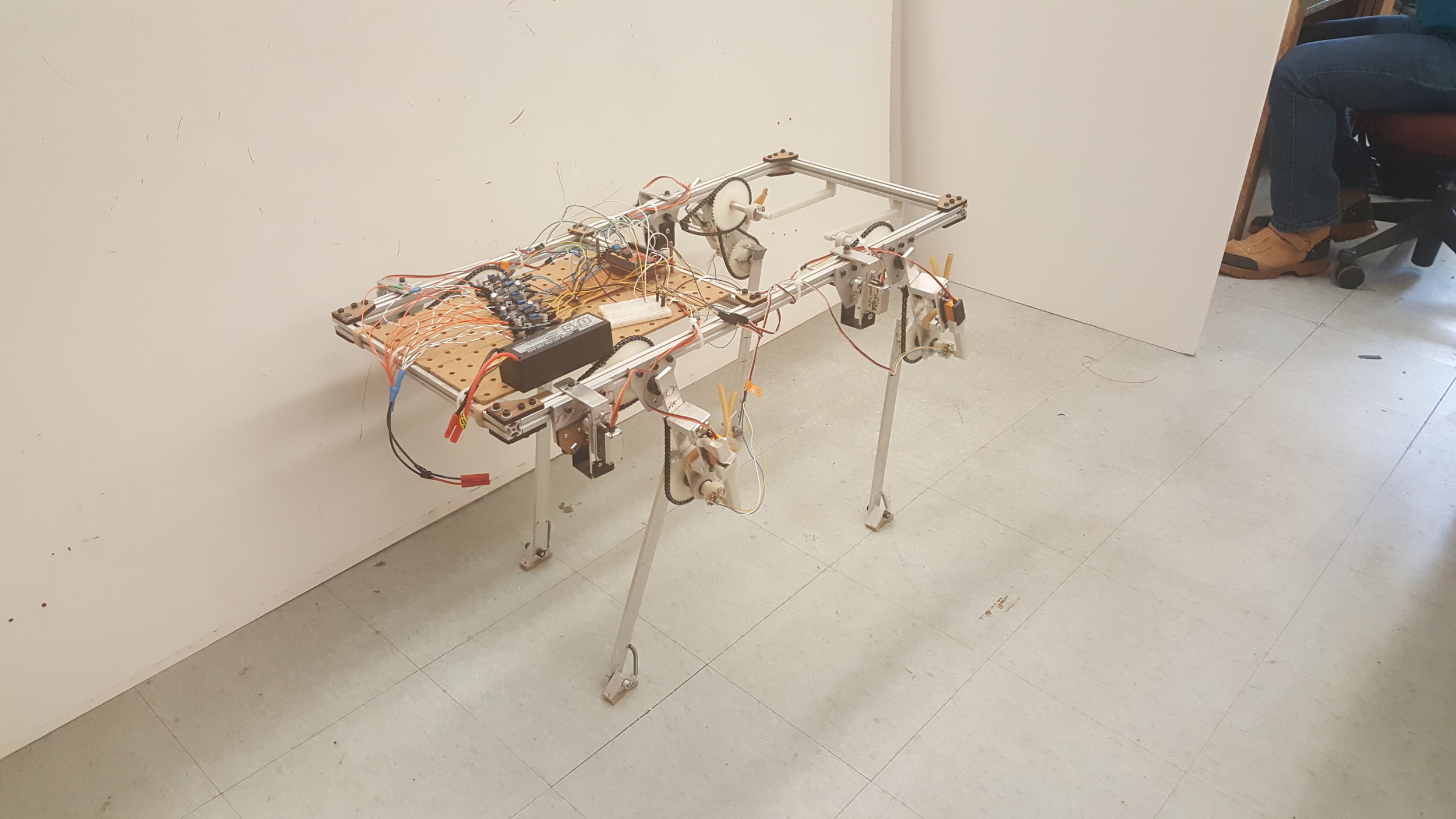

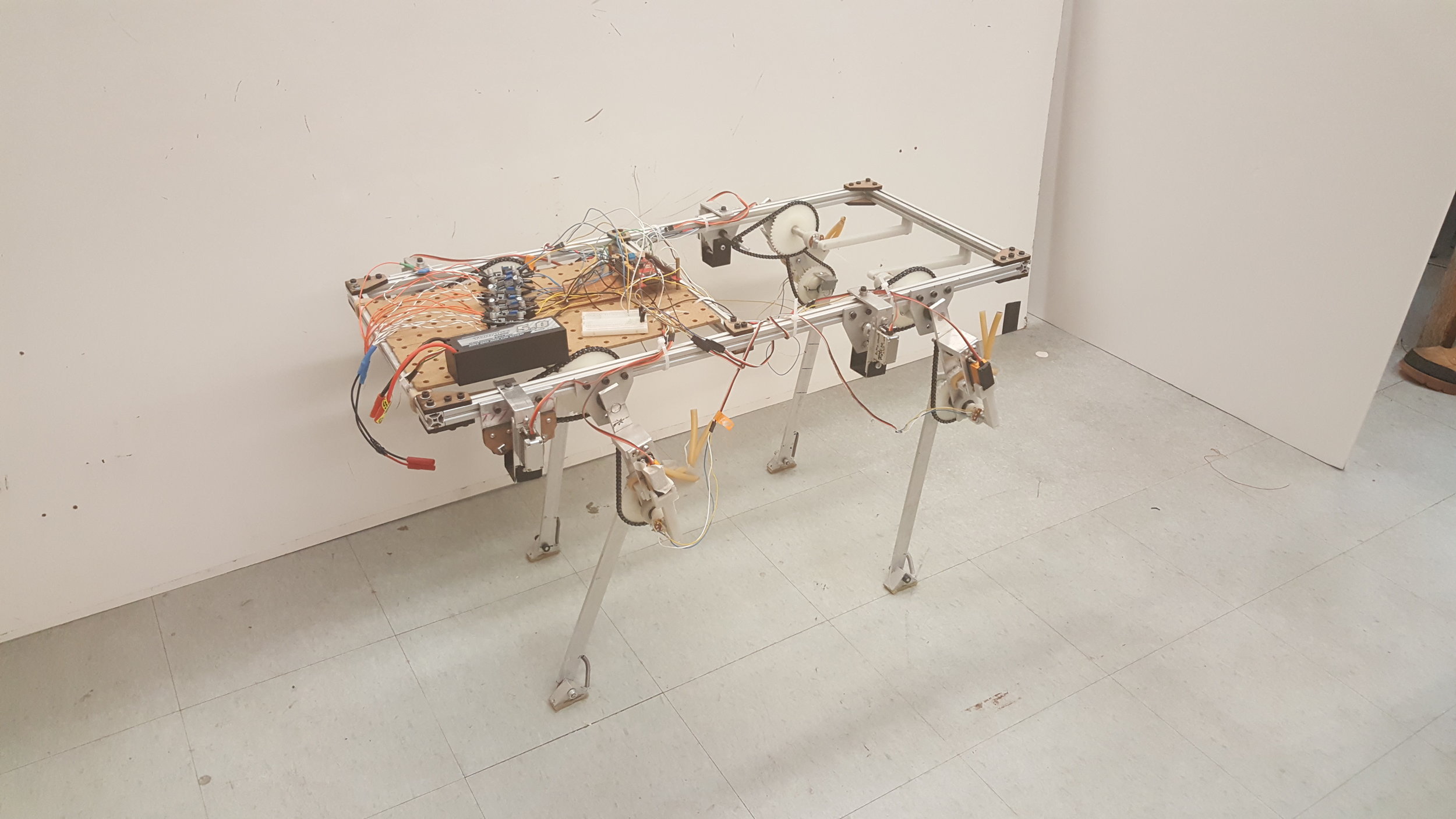

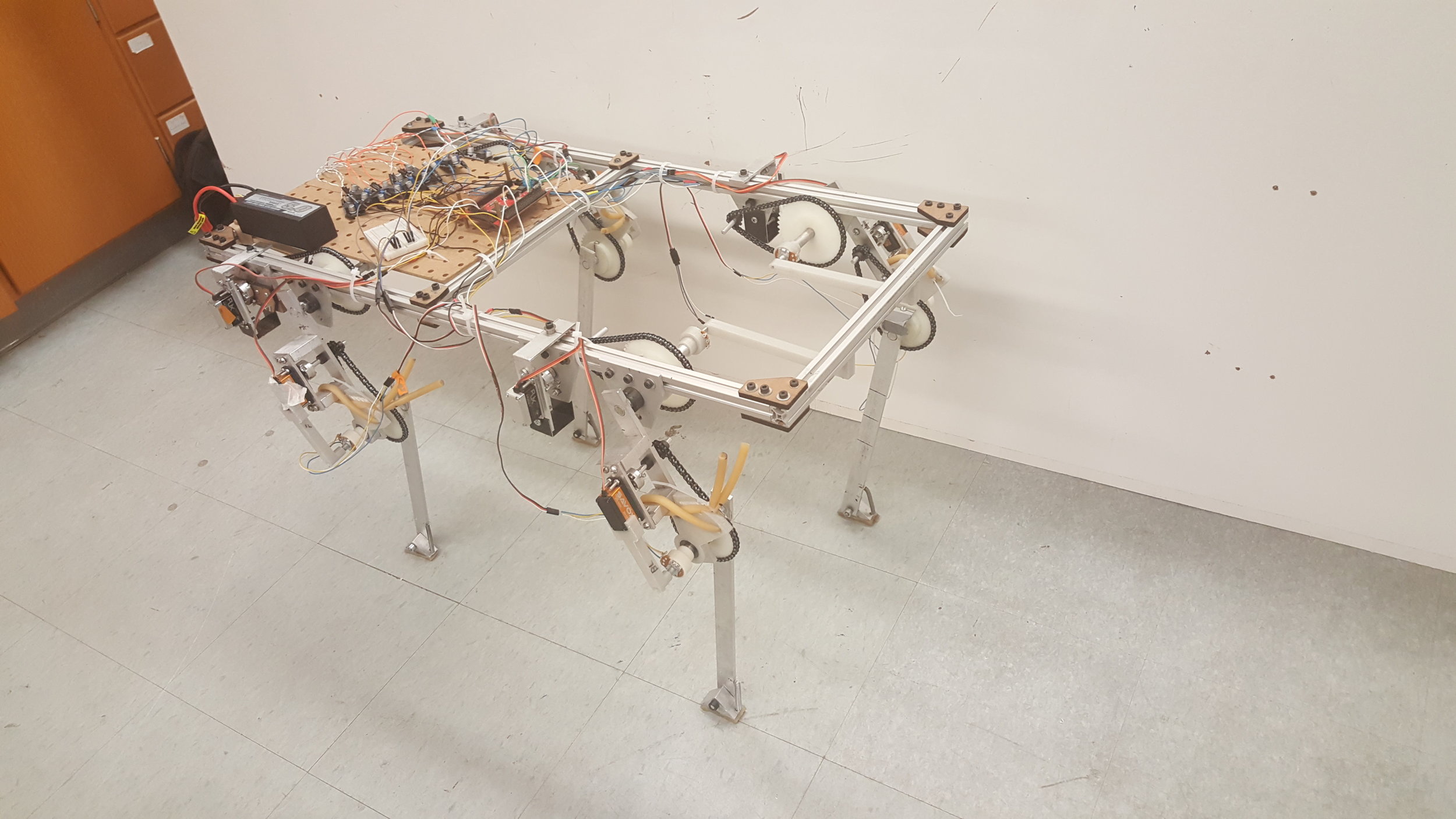

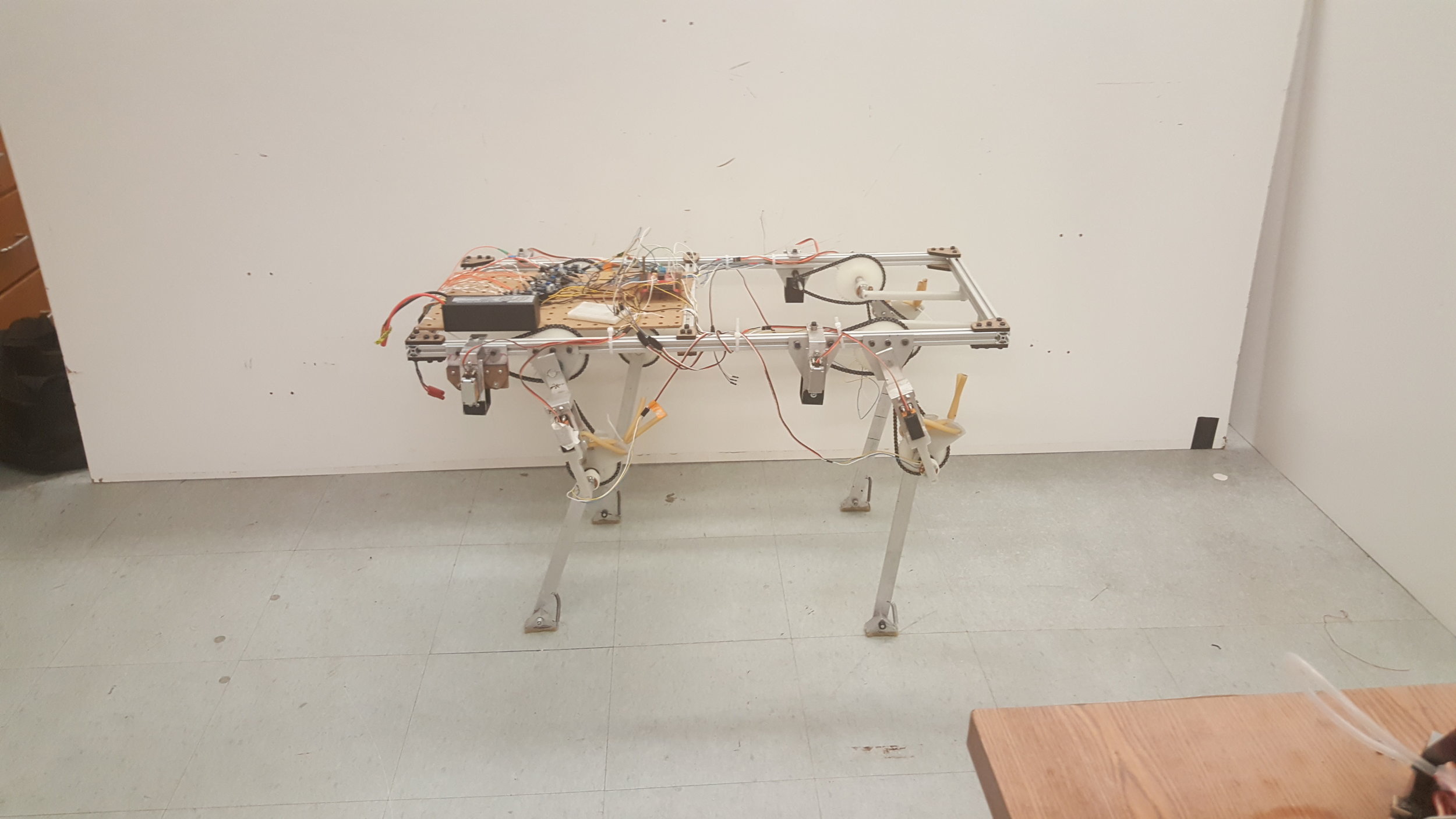

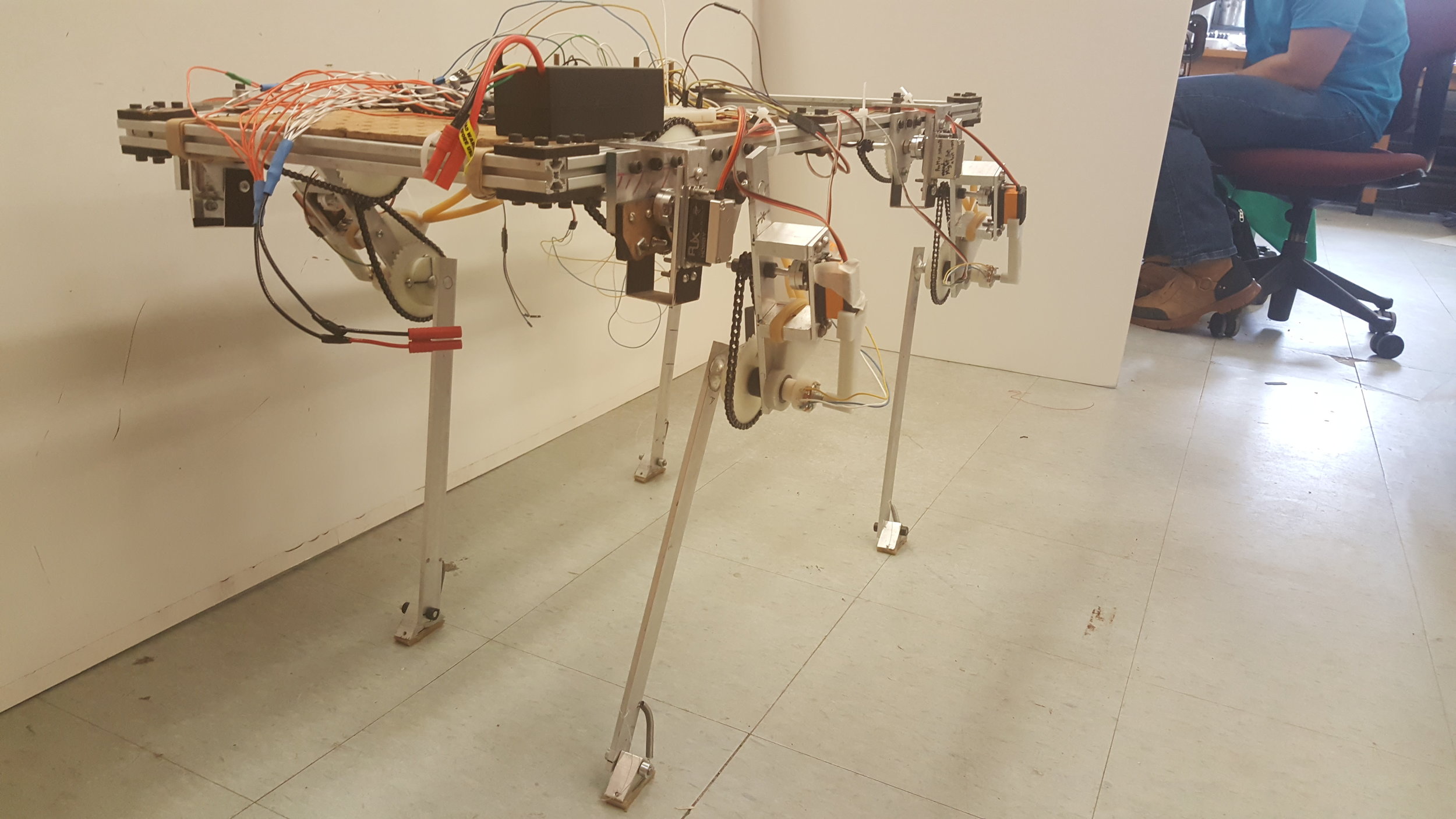

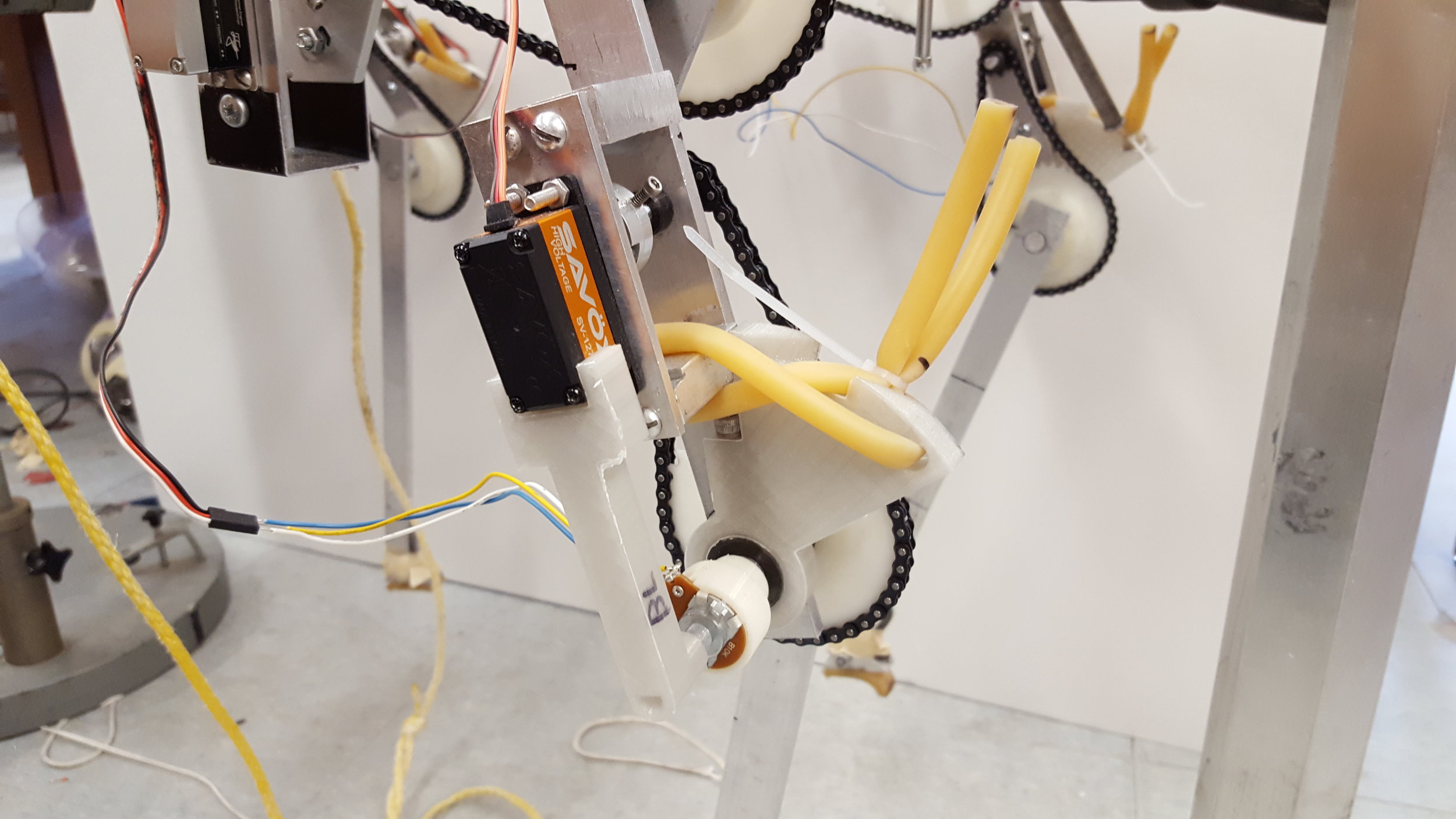

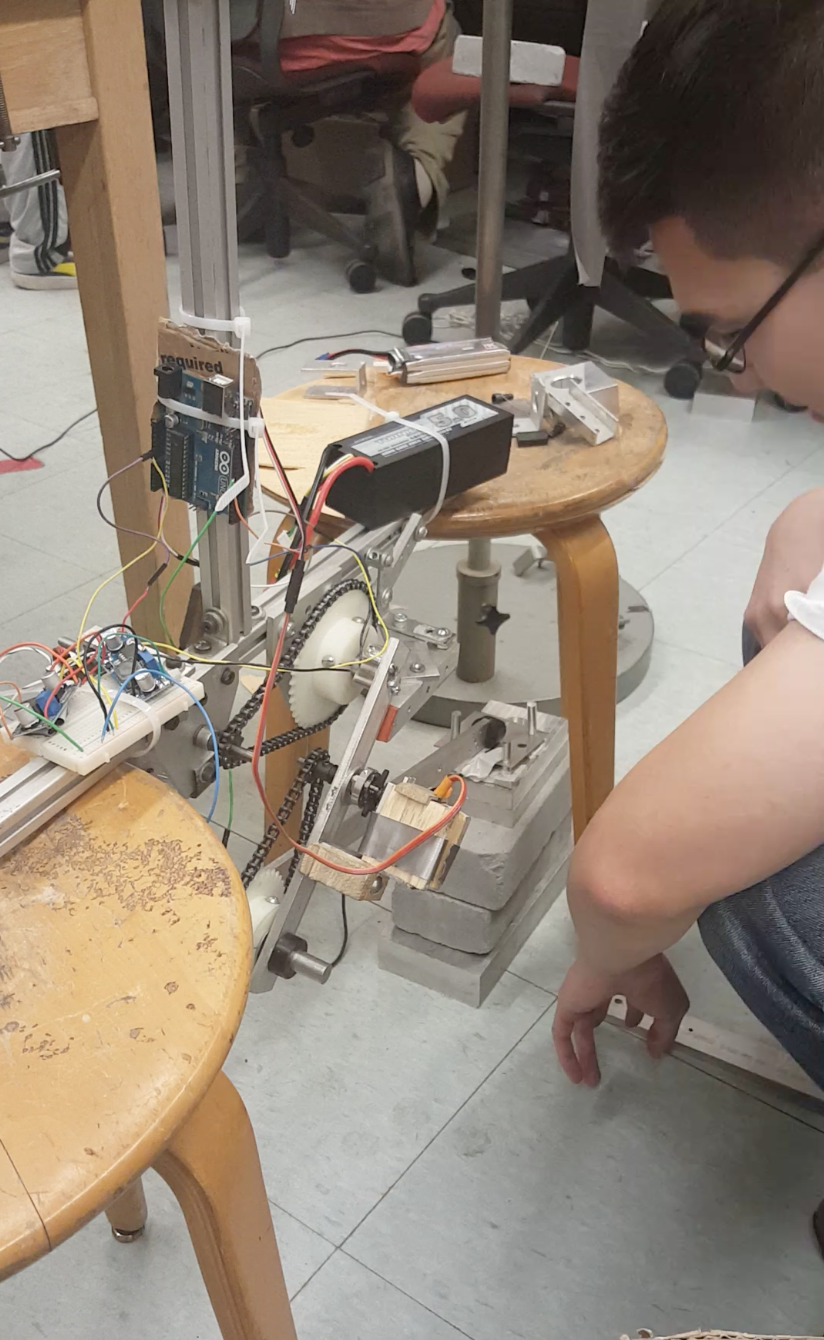

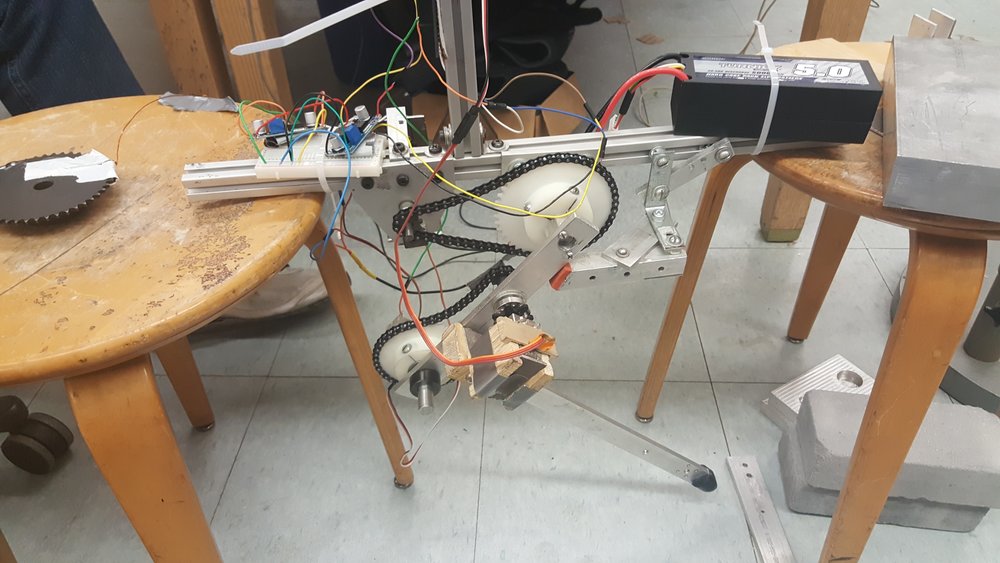

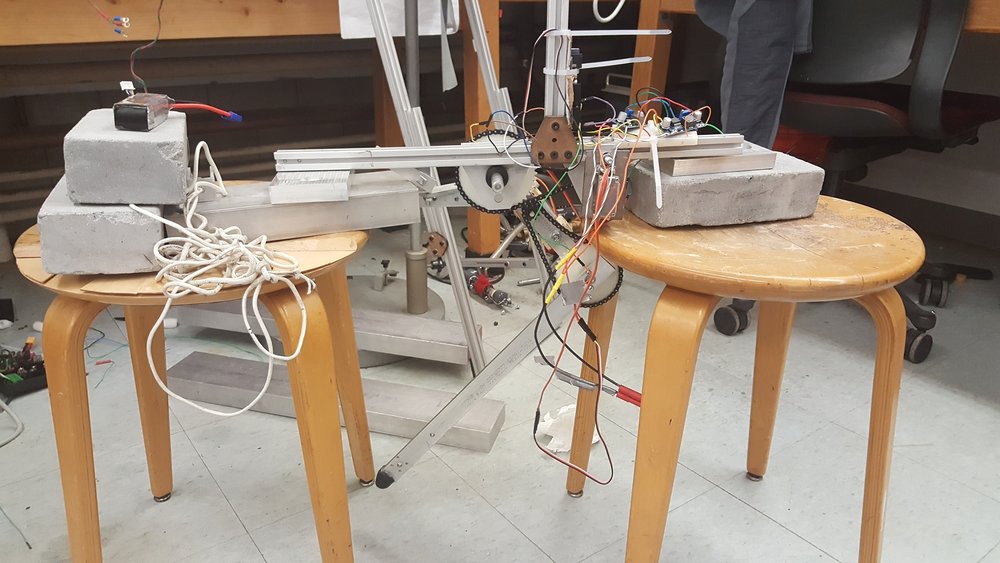

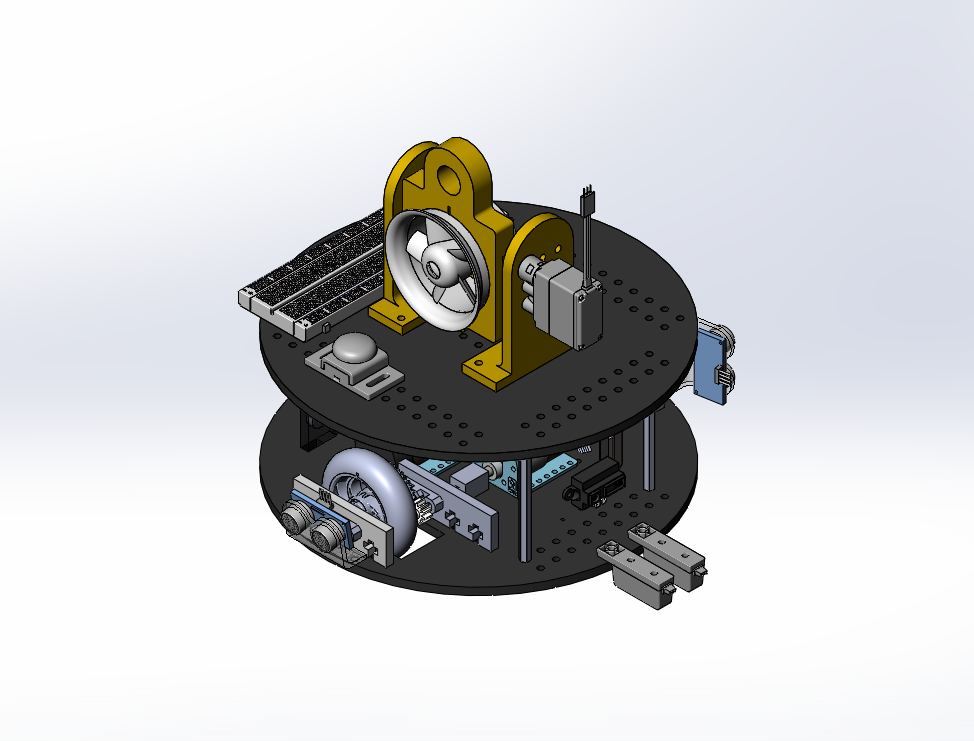

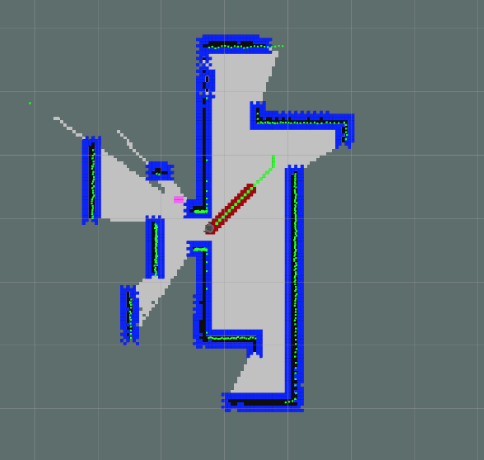

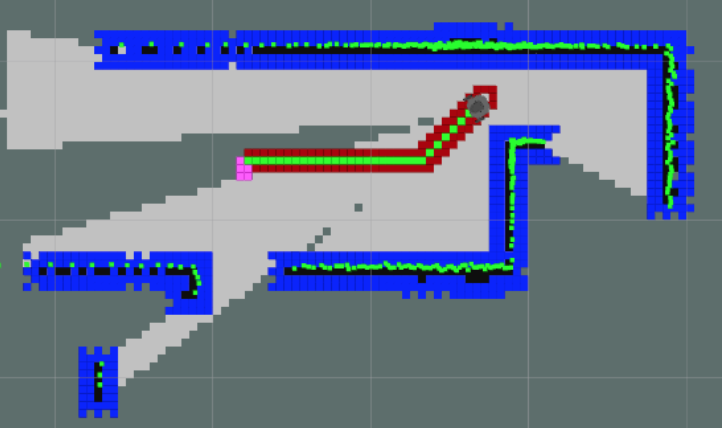

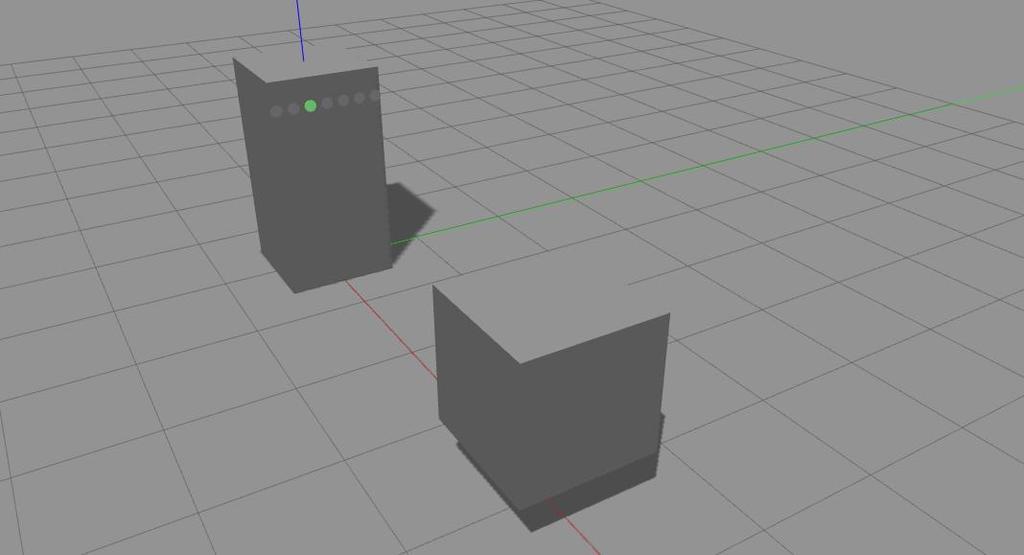

For my senior thesis project, I led a team of six in developing a novel heterogeneous, distributed platform for autonomous 3D construction. The project led to a journal publication in IEEE Robotics and Automation Letters (RA-L) and won an award at WPI. The platform is composed of two types of robots acting in a coordinated and complementary fashion: (i) a collection of communicating smart construction blocks that behave as a form of growable smart matter and are capable of planning and monitoring their own state and the construction progress; and (ii) a team of inchworm-inspired builder robots designed to navigate and modify the 3D structure, following the guidance of the smart blocks. In addition to leading the team, I was responsible for developing the distributed construction algorithm, several control algorithms used onboard the robots, and a custom simulator for viewing the progress of the swarm.

See the publication here.

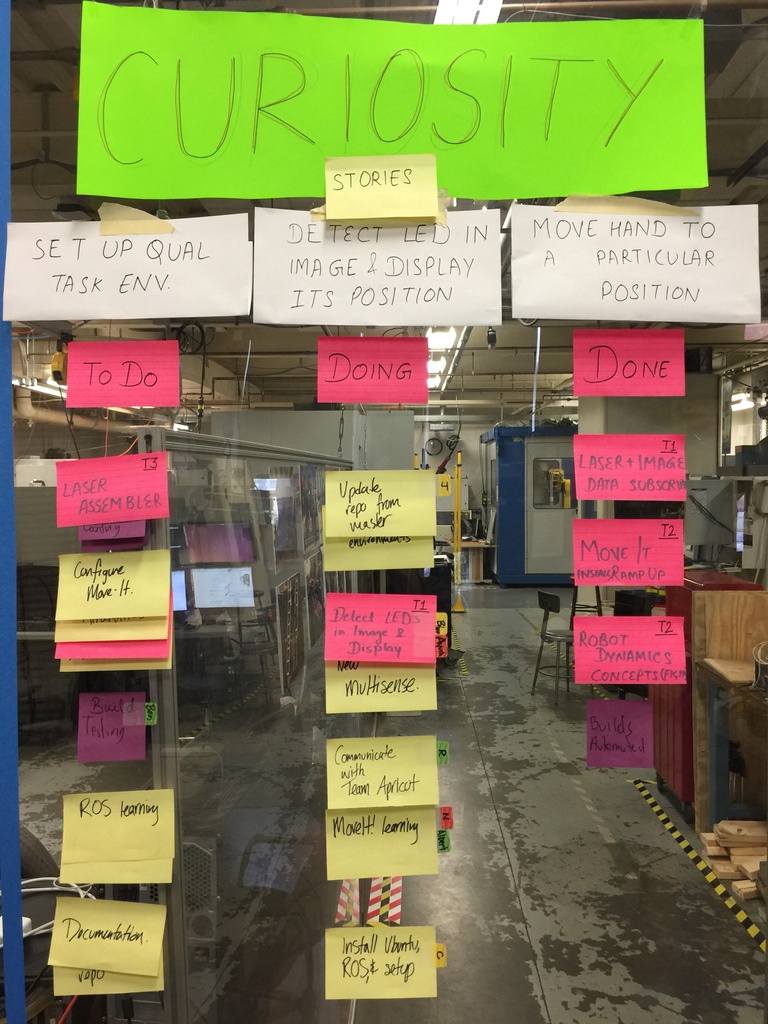

I also have experience with swarm (multi-agent) robotics from working as a developer for NEST Labs at WPI, where I have contributed to two projects. The first project involved developing a ranking algorithm to distribute tuples across a robotic swarm network, so that the swarm could explore an unknown environment with maximum coordination and utility. It addresses a major issue in multi-agent systems: how to share information across a network. The algorithm used a neural network to optimize how information is distributed between robots, based on parameters such as the amount of data each robot was currently storing and its position relative to the swarm. This culminated in the idea that robots storing a lot of data should be used less for exploration, while robots with a lot of free storage should be used more. The second project involved developing a VR app to control a swarm that autonomously pushes items to locations specified in the app. I helped develop the VR app and helped port the project to the Buzz programming language.
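As a rough illustration of the storage-based ranking idea from the first project, here is a minimal sketch. It is not the neural network used in the project: it swaps in a hand-written weighted score, and the `Robot` fields, the weights, `max_range`, and the choice to favor robots near the swarm centroid are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Robot:
    name: str
    stored: int     # tuples currently held (hypothetical field)
    capacity: int   # maximum tuples the robot can hold (hypothetical field)
    x: float
    y: float


def exploration_score(robot, centroid, w_storage=0.7, w_position=0.3, max_range=10.0):
    """Higher score means a better candidate for exploration.

    Assumed heuristic: more free storage raises the score, and so does
    being close to the swarm centroid. The weights are illustrative.
    """
    free_fraction = 1.0 - robot.stored / robot.capacity
    dist = math.hypot(robot.x - centroid[0], robot.y - centroid[1])
    closeness = max(0.0, 1.0 - dist / max_range)
    return w_storage * free_fraction + w_position * closeness


def rank_for_exploration(robots):
    """Return the robots sorted from best to worst exploration candidate."""
    cx = sum(r.x for r in robots) / len(robots)
    cy = sum(r.y for r in robots) / len(robots)
    return sorted(robots, key=lambda r: exploration_score(r, (cx, cy)), reverse=True)


swarm = [
    Robot("a", stored=9, capacity=10, x=0.0, y=0.0),
    Robot("b", stored=1, capacity=10, x=0.0, y=0.0),
    Robot("c", stored=5, capacity=10, x=0.0, y=0.0),
]
ranking = [r.name for r in rank_for_exploration(swarm)]
print(ranking)
```

With all three robots at the same position, the ordering depends only on free storage, so the nearly full robot `a` ends up last in the exploration ranking.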

In addition to these projects, I worked on a multi-agent robotics project for NASA JPL called PUFFER; more about it can be found under work experience.

Description of skills:

• Developed a ranking algorithm to distribute tuples across a robotic swarm network, enabling exploration of an unknown environment with maximum coordination and utility of the swarm.

• Developed a VR app to control a swarm that autonomously pushes items to locations specified in the app.